
13 Security Lab


Deep Dive into Dark Web Research using Tor Crawler

Maj0r Tom 2021. 2. 21. 01:09

What is a Dark Web Tor Crawler?

The Dark Web Tor Crawler is a classifier and search engine that crawls the inside of sites reached through hidden onion links, collecting and classifying otherwise unknown information and clearing some of the fog from this gray space.

In the deep web or dark web, information is generally hidden, so it is not easy to judge what a site is about. The purpose of the crawler is to determine the nature of Tor websites by collecting and classifying them.

Keywords: tor network, tor browser, onion domain, dark-web, tor crawler, digital-forensic

Understanding the Dark Web (Deep Web) and Deep Web Crawling Techniques

What are Tor and the Tor Project?

The Onion Router (project name)

Tor is an abbreviation for "The Onion Router". It is one of the tools used to bypass network restrictions and anonymize traffic.

The name also refers to the anonymity network itself, which is used to access the deep web.

Strictly speaking, "Tor" refers to the Tor Project, but note that people use the name interchangeably for the Tor Browser and the Tor network, since the project includes both.

For more details, refer to the link below.

▶ Learn about Tor Project

Introduction to Tor Deep Web

Deep web sites are not easy to search because, by their nature, their addresses are hidden.

A deep web site address goes by several names: onion URL = onion link = Tor link.

Even if you obtain a public address through multiple channels, it is difficult to get complete information about a deep web site, and the site may contain hidden content.

I think the reason people use the deep web or the dark web is probably that they want to do things they are reluctant to make public and searchable.

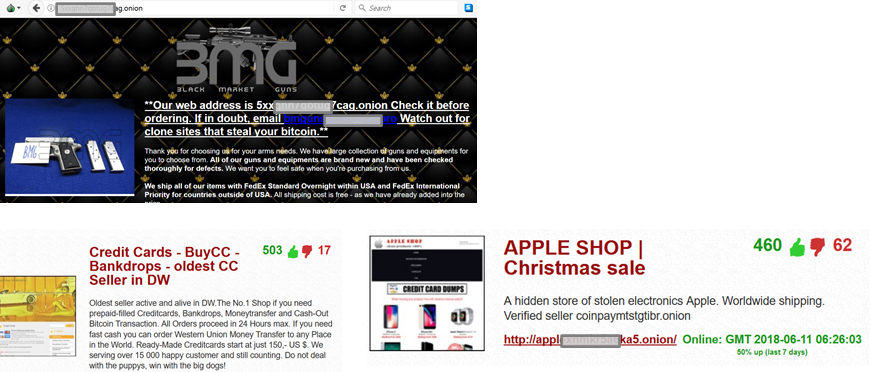

So these sites are expected to involve illegal activity. Some look like sites with legitimate purposes but contain hidden sites inside, which can be used for things such as drug sales.

What kinds of illegal things are on the Tor deep web?

- Weapon

- Stolen goods

- Hacking

- Drugs

- Prostitution

- Child pornography

These are things that are generally difficult to share publicly on the Internet.

Problems in developing a Dark Web Tor Crawler

As mentioned earlier, several problems need to be solved to develop the Dark Web Tor Crawler.

The first is that hidden onion links are difficult to find.

Because the deep web is the part of the web that search engines have not indexed, finding a site means either getting its address from someone you know or scraping together hidden links from a few public sites.

Public deep web wikis, which can be found through Google, are the best way to get links from public sites. They collect site addresses and brief introductions to Tor websites such as "The Hidden Wiki" and "Dream Market".

Second, it is difficult to get a site's content.

To browse a Tor site, you need to access it through the Tor Browser, and the connection is slow. In addition, many sites require a login and operate privately.

Third, even if you get an address, it is difficult to find out what the site is. As mentioned, some sites require you to log in, and with hidden wikis you don't know what content a site holds, or how much, until you enter it. Most of these sites are not "famous", so they lack the popularity and reputation of the sites we are familiar with, which makes them hard to trust.

How to explore deep web space?

Next, I will derive a method for investigating malicious sites on the dark web using the Tor Crawler, and use it to find out what these sites are for and what kind of character they have.

The following situations are possible. Judging by its title, a site may appear to be made for some ordinary hobby while its content contains something out of place. Or the site content may look normal but contain another hidden link inside, and following that link leads to something illegal.

This is an intuitive idea that anyone can easily come up with.

Open onion pages on the deep web

Get links from public pages such as "Hidden Wiki", or extract links from sites that have built search engines with similar characteristics.
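Harvesting links from a listing page such as "Hidden Wiki" comes down to parsing its HTML and keeping only anchors whose host ends in `.onion`. The sketch below does this with Python's standard `html.parser`; the class and function names are hypothetical, and it assumes the page HTML has already been downloaded.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class OnionLinkParser(HTMLParser):
    """Collect the href of every <a> tag that points to a .onion host."""

    def __init__(self, base_url: str = "") -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag: str, attrs: list[tuple[str, str | None]]) -> None:
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        try:
            # Resolve relative links (e.g. "/market") against the page's own URL.
            absolute = urljoin(self.base_url, href.strip())
            host = urlsplit(absolute).hostname or ""
        except ValueError:
            return  # malformed URL on the page; skip it instead of crashing
        if host.endswith(".onion") and absolute not in self.links:
            self.links.append(absolute)


def extract_onion_links(html: str, base_url: str = "") -> list[str]:
    """Return the unique .onion links in a page, in document order."""
    parser = OnionLinkParser(base_url)
    parser.feed(html)
    parser.close()
    return parser.links
```

Passing the page's own URL as `base_url` lets relative links on an onion page resolve to the same onion host.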

Public Search Engine

Collect Tor page and link information, which Google indexes only rarely.

Even when a page cannot be accessed, limited information can be collected through the "Cached Page" function.

Navigating links

After getting the site address, follow the links and navigate inside the site.

The site may contain other onion links; if so, start a new search from each of them.
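Following links inside a site and starting a new search whenever another onion link turns up is essentially a breadth-first traversal. A minimal sketch, assuming hypothetical `fetch` (returns HTML, or `None` when the site is unreachable) and `extract_links` callables, for example a Tor-proxied HTTP client and an HTML link parser; `max_pages` is an illustrative safety limit.

```python
from collections import deque
from typing import Callable, Dict, Iterable, Optional
from urllib.parse import urlsplit

Fetch = Callable[[str], Optional[str]]              # URL -> HTML, or None if unreachable
ExtractLinks = Callable[[str, str], Iterable[str]]  # (HTML, page URL) -> links


def crawl(seeds: Iterable[str], fetch: Fetch, extract_links: ExtractLinks,
          max_pages: int = 100) -> Dict[str, Optional[str]]:
    """Breadth-first crawl of onion sites starting from seed URLs.

    Returns visited URL -> HTML in visit order (None marks an unreachable page).
    """
    queue = deque(dict.fromkeys(seeds))  # de-duplicate seeds, keep their order
    seen = set(queue)
    pages: Dict[str, Optional[str]] = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        pages[url] = html
        if html is None:
            continue  # offline or refused; recorded so it can be retried later
        for link in extract_links(html, url):
            host = urlsplit(link).hostname or ""
            # Only follow links that stay in onion space; each newly found
            # onion link starts another branch of the search.
            if host.endswith(".onion") and link not in seen:
                seen.add(link)
                queue.append(link)
    return pages
```

Breadth-first order visits the pages closest to the seed list first, which helps when slow Tor connections mean only a limited number of pages can be fetched.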

Developing Tor Crawler Engine using TF-IDF

Crawling Tor websites is a little different from regular website crawling.

Because the tool enters sites whose structure is not known in advance, it should be able to parse many types of site structure, and site information should be collected by visiting sites in random order.

Simply searching for what I want does not return good results, so it is necessary to classify what each crawled site deals with. I use TF-IDF for this.

What is TF-IDF?

TF-IDF (Term Frequency-Inverse Document Frequency) is a weight used in information retrieval and text mining. Given a collection of documents, it is a statistical value indicating how important a word is to a specific document in that collection.
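One common form of this weight is shown below, where f_{t,d} is the number of times term t occurs in document d and N is the number of documents in the collection D. Other variants (smoothed idf, log-scaled tf) are also widely used.

```latex
\mathrm{tfidf}(t, d, D) = \mathrm{tf}(t, d) \cdot \mathrm{idf}(t, D),
\qquad
\mathrm{tf}(t, d) = \frac{f_{t,d}}{\sum_{t'} f_{t',d}},
\qquad
\mathrm{idf}(t, D) = \log \frac{N}{\lvert \{ d \in D : t \in d \} \rvert}
```

For example, a word that appears on every crawled page has idf = log(N/N) = 0 and carries no weight, while a word that is frequent on one page but rare elsewhere scores high.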

▶ Learn about TF-IDF

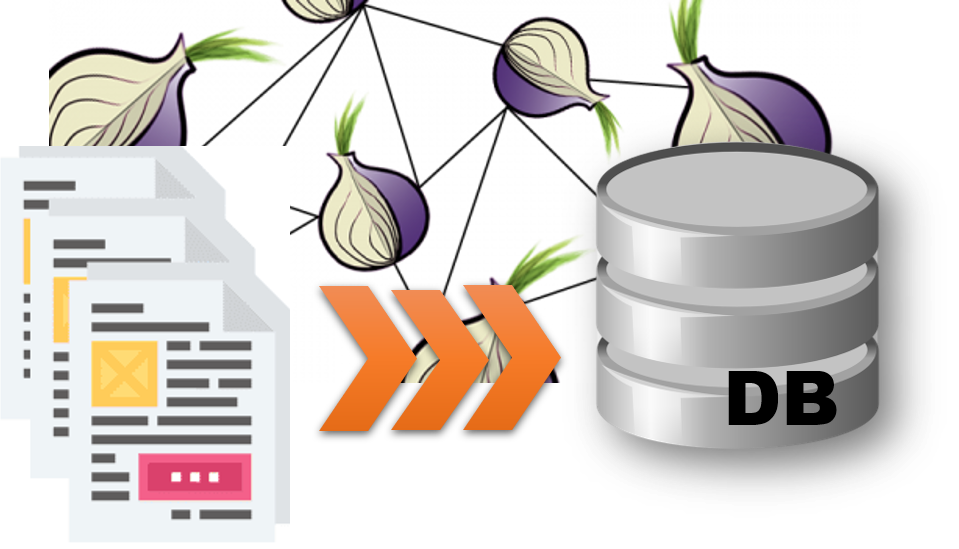

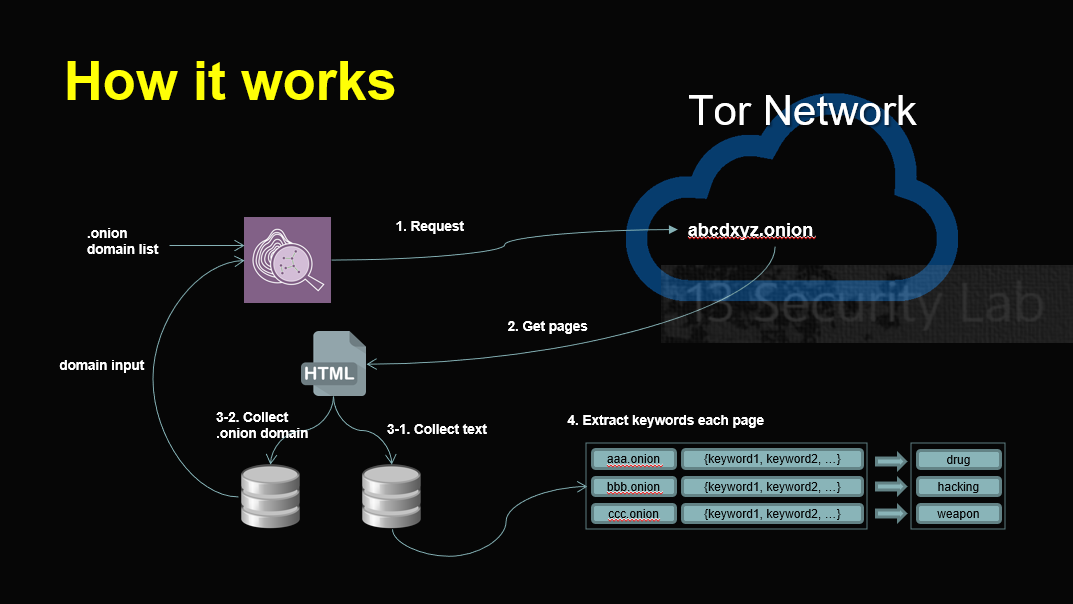
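A from-scratch sketch of TF-IDF keyword extraction, assuming the pages have already been fetched and, where needed, translated into English. It uses term frequency normalized by page length and idf = log(N / df), one common variant; the tokenizer is deliberately simple and the function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List

TOKEN_RE = re.compile(r"[a-z]+")


def tokenize(text: str) -> List[str]:
    """Lowercase and split into alphabetic tokens (a deliberately simple tokenizer)."""
    return TOKEN_RE.findall(text.lower())


def tf_idf(docs: List[str]) -> List[Dict[str, float]]:
    """Return one {term: tf-idf weight} mapping per document."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    # Document frequency: how many documents contain each term at least once.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        weights.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


def top_keywords(weights: Dict[str, float], k: int = 5) -> List[str]:
    """Highest-weighted terms of one document (ties broken alphabetically)."""
    ranked = sorted(weights.items(), key=lambda kv: (-kv[1], kv[0]))
    return [term for term, _ in ranked[:k]]
```

The top-weighted terms of each crawled page can then serve as its keywords. scikit-learn's `TfidfVectorizer` is a production alternative, but it uses a smoothed idf and L2 normalization by default, so its weights differ from this sketch.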

Design of the Tor Crawler Engine

Process for Dark Web Tor Crawler Development

- Collect hidden Tor website links (onion links) in the Tor network

- Expected information to be collected: hidden pages related to drugs, prostitution, and the like

- Dynamically crawl hidden sites on the dark web

- Build a database of crawled site information

- Classify the stored information based on TF-IDF and enter the results into the DB

- Build a dark web search engine on top of the DB
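The database, classification, and search steps above can be sketched with Python's built-in `sqlite3`. The table layout, column names, and category labels are hypothetical; a real deployment could use any database, and the "search engine" here is only a `LIKE` query over titles and keywords.

```python
import sqlite3
from typing import List, Optional, Tuple

SCHEMA = """
CREATE TABLE IF NOT EXISTS pages (
    url        TEXT PRIMARY KEY,
    title      TEXT,
    keywords   TEXT,              -- space-separated top TF-IDF terms
    category   TEXT,              -- label assigned by the classifier
    alive      INTEGER NOT NULL,  -- 1 if the site responded when crawled
    crawled_at TEXT DEFAULT CURRENT_TIMESTAMP
)
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def save_page(conn: sqlite3.Connection, url: str, title: str,
              keywords: List[str], category: str, alive: bool) -> None:
    """Insert a crawled page, or replace it if the URL was crawled before."""
    conn.execute(
        "INSERT OR REPLACE INTO pages (url, title, keywords, category, alive) "
        "VALUES (?, ?, ?, ?, ?)",
        (url, title, " ".join(keywords), category, int(alive)),
    )
    conn.commit()


def search(conn: sqlite3.Connection, term: str,
           category: Optional[str] = None) -> List[Tuple[str, str]]:
    """Return (url, category) for pages whose title or keywords contain the term."""
    sql = "SELECT url, category FROM pages WHERE (title LIKE ? OR keywords LIKE ?)"
    params = [f"%{term}%", f"%{term}%"]
    if category is not None:
        sql += " AND category = ?"
        params.append(category)
    return conn.execute(sql + " ORDER BY url", params).fetchall()
```

Using the URL as the primary key means re-crawling a site refreshes its row instead of duplicating it.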

Considerations

1. Check how to use the Tor protocol

Is a browser required? Is a library available? Can it be used from Python?
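A browser is not strictly required: a running Tor client exposes a SOCKS proxy that ordinary HTTP libraries can use. The sketch below assumes Tor is listening on its default SOCKS port 9050 (Tor Browser uses 9150) and that `requests` is installed with SOCKS support (`pip install "requests[socks]"`); the function names are hypothetical.

```python
# Route HTTP requests through a local Tor client's SOCKS proxy.
# Assumptions: a Tor client is running locally, and requests[socks] is installed.

TOR_SOCKS_HOST = "127.0.0.1"
TOR_SOCKS_PORT = 9050  # Tor daemon default; Tor Browser listens on 9150


def tor_proxies(host: str = TOR_SOCKS_HOST, port: int = TOR_SOCKS_PORT) -> dict:
    """Build a requests-style proxies mapping for Tor.

    The "socks5h" scheme makes the proxy resolve hostnames, which .onion
    addresses require: ordinary DNS cannot resolve them.
    """
    url = f"socks5h://{host}:{port}"
    return {"http": url, "https": url}


def fetch_onion(url: str, timeout: float = 60.0) -> str:
    """Fetch a page through Tor and return its HTML text."""
    import requests  # imported here so tor_proxies() works without requests installed

    resp = requests.get(url, proxies=tor_proxies(), timeout=timeout)
    resp.raise_for_status()
    return resp.text
```

A generous timeout is used because, as noted earlier, Tor connections are slow.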

2. Check whether a Tor crawler has already been implemented.

If so, can it be reused? How user-friendly is it?

3. How can multiple languages be processed in batch?

Foreign-language pages should be translated before they are imported: Google Cloud Translation API Documentation

Pages are translated into English and then imported.
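Batch translation can be sketched as splitting page texts into chunks and sending each chunk to a translator. The batching helper is backend-agnostic; `make_google_translator` assumes the `google-cloud-translate` package (its `translate_v2.Client`) and configured Google Cloud credentials. The default `batch_size` of 50 is an arbitrary illustrative value, not an API limit.

```python
from typing import Callable, List

Translator = Callable[[List[str]], List[str]]  # list of texts -> list of English texts


def translate_pages(texts: List[str], translate_batch: Translator,
                    batch_size: int = 50) -> List[str]:
    """Translate texts in fixed-size batches, preserving input order."""
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    translated: List[str] = []
    for start in range(0, len(texts), batch_size):
        chunk = texts[start:start + batch_size]
        result = translate_batch(chunk)
        if len(result) != len(chunk):
            raise RuntimeError("translator returned a different number of texts")
        translated.extend(result)
    return translated


def make_google_translator(target: str = "en") -> Translator:
    """Wrap the Google Cloud Translation v2 client (credentials must be configured)."""
    from google.cloud import translate_v2 as translate  # pip install google-cloud-translate

    client = translate.Client()  # created once and reused for every batch

    def translate_batch(chunk: List[str]) -> List[str]:
        # Given a list, Client.translate returns one result dict per input text.
        results = client.translate(chunk, target_language=target)
        return [r["translatedText"] for r in results]

    return translate_batch
```

Keeping the translator as a plain callable makes it easy to swap in another service, or a fake one when testing.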

4. How to investigate?

Design the crawler so it can crawl any page and retrieve further links from the links on it.

Each Tor URL found needs to be identified.

Each URL needs to be checked for availability.
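Identifying each Tor URL can start with checking that its host is a well-formed onion address. Per the Tor rendezvous specification (rend-spec-v3), a version 3 onion address is the base32 encoding of a 32-byte public key, a 2-byte checksum, and a version byte (3), so malformed or mistyped addresses can be rejected offline before any request is made. The function names are hypothetical, and older 16-character v2 addresses, which carry no checksum, are not handled here.

```python
import base64
import hashlib
from typing import Dict, Iterable, List
from urllib.parse import urlsplit


def onion_host(url: str) -> str:
    """Return the lowercase host of a URL ('' if it has none)."""
    return urlsplit(url).hostname or ""


def is_valid_v3_onion(host: str) -> bool:
    """Check base32(PUBKEY | CHECKSUM | VERSION) + '.onion', where
    CHECKSUM = SHA3-256(".onion checksum" | PUBKEY | VERSION)[:2] and VERSION = 0x03.
    """
    host = host.lower()
    if not host.endswith(".onion"):
        return False
    label = host[: -len(".onion")].rsplit(".", 1)[-1]  # drop any subdomain
    if len(label) != 56:
        return False
    try:
        raw = base64.b32decode(label.upper())
    except ValueError:  # characters outside the base32 alphabet
        return False
    pubkey, checksum, version = raw[:32], raw[32:34], raw[34:]
    if version != b"\x03":
        return False
    expected = hashlib.sha3_256(b".onion checksum" + pubkey + version).digest()[:2]
    return checksum == expected


def group_by_onion(urls: Iterable[str]) -> Dict[str, List[str]]:
    """Group URLs by valid v3 onion host, discarding malformed addresses."""
    groups: Dict[str, List[str]] = {}
    for url in urls:
        host = onion_host(url)
        if is_valid_v3_onion(host):
            groups.setdefault(host, []).append(url)
    return groups
```

A well-formed address can still be offline, so availability has to be checked separately by fetching each host through Tor with a timeout.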

5. How to prove?

List and save suspicious URLs.

Crawl onion domains that are currently active.
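Saving suspicious, still-active URLs can be as simple as a CSV export. The `SUSPICIOUS` label set and the `(url, alive, category)` record shape are hypothetical; they assume an earlier crawl recorded whether each domain responded and a classifier assigned a category.

```python
import csv
from datetime import datetime, timezone
from typing import Iterable, Optional, TextIO, Tuple

# Hypothetical labels treated as suspicious; align these with the classifier's categories.
SUSPICIOUS = {"drugs", "weapons", "hacking", "stolen goods"}

Record = Tuple[str, bool, Optional[str]]  # (url, alive, category)


def save_suspicious(records: Iterable[Record], out: TextIO) -> int:
    """Write active URLs with a suspicious category as CSV; return the rows written."""
    writer = csv.writer(out)
    writer.writerow(["url", "category", "checked_at"])
    checked_at = datetime.now(timezone.utc).isoformat(timespec="seconds")
    count = 0
    for url, alive, category in records:
        if alive and category in SUSPICIOUS:
            writer.writerow([url, category, checked_at])
            count += 1
    return count
```

To write to disk, pass a file opened with `open(path, "w", newline="", encoding="utf-8")`, as the `csv` module documentation recommends.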

6. Extract keywords from each page and represent them so that they indicate the page's category
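One simple way to turn a page's weighted keywords (for example, TF-IDF weights) into a category label is to score hand-picked term lists. The `CATEGORY_TERMS` lexicon below is hypothetical and illustrative only; a classifier trained on labeled pages could replace it.

```python
from typing import Dict, Set

# Hypothetical category lexicon; a real one would be built from labeled pages.
CATEGORY_TERMS: Dict[str, Set[str]] = {
    "drugs": {"pills", "cocaine", "cannabis", "mdma"},
    "weapons": {"gun", "pistol", "ammo", "rifle"},
    "hacking": {"exploit", "malware", "ddos", "botnet", "ransomware"},
}


def categorize(keywords: Dict[str, float], min_score: float = 0.0) -> str:
    """Pick the category whose terms carry the most keyword weight.

    `keywords` maps term -> weight. Returns "unknown" when no category
    scores above `min_score`.
    """
    scores = {
        category: sum(w for term, w in keywords.items() if term in terms)
        for category, terms in CATEGORY_TERMS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > min_score else "unknown"
```

Weighting by TF-IDF rather than raw counts means a category term that appears on every page contributes little, which matches the intent of picking out what makes a page distinctive.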

Goal

The crawler extracts information that can show whether an onion domain has suspicious signs of illicit activity.

If a website has a page with a hidden purpose, the crawler finds that hidden information, then collects and classifies it.

Crawling Results

The dark web crawler stores collected domains and related information.

References

- ACHE Crawler (Crawling Dark Web Sites on the TOR network): ache.readthedocs.io/en/latest/tutorial-crawling-tor.html

- TorBot: github.com/DedSecInside/TorBoT